Introduction

This is my reading notes for Yin J, Wang J’s A Dirichlet Multinomial Mixture model-based approach for short text clustering. I explained the main idea of this paper and summarized the paper with both the author’s explanations and my understanding. If you want to talk about this paper with me, please contact me at haroldliuj@gmail.com. Have a good trip!

Main Idea

Gibbs Sampling for Dirichlet Multinomial Mixture(GSDMM) is based on Dirichlet Multinomial Mixture. The main idea of this process can be seen as a Movie Group Process, that is, assume a professor of a movie discussion course plans to divide the students into several groups. She expects the students in the same group have watched more movies of the same, so they would have more things to discuss. The professor asks the students to write down the movies they have watched within several minutes.

The goal is that students in the same group will share similar interests (similar movie lists), while students in different groups will share different interests.

First, she or he will randomly assign students to some tables, then she will relocate students with 2 rules.

There are 2 rules for this process:

- Rule 1: Choose a table with more student

- Rule 2: Choose a table whose students share similar interests (i.e., watched more movies of the same) with him

Rule 1 can achieve high Completeness, that is, all members of a ground true group are assigned to the same cluster

Rule 2 can achieve high Homogeneity, that is, each cluster contains only members of a single ground true group

Foundations

1. Dirichlet Multinomial Mixture

This is a generative model for documents with 2 steps:

Select a cluster $k$ according to the probability distribution of the cluster $p(z=k)$, which is a multinomial distribution with a Dirichlet prior

Document $d$ is generated by the selected cluster from distribution $p(d|z = k)$, which is also a multinomial distribution with a Dirichlet prior

So the likelihood of the document is:

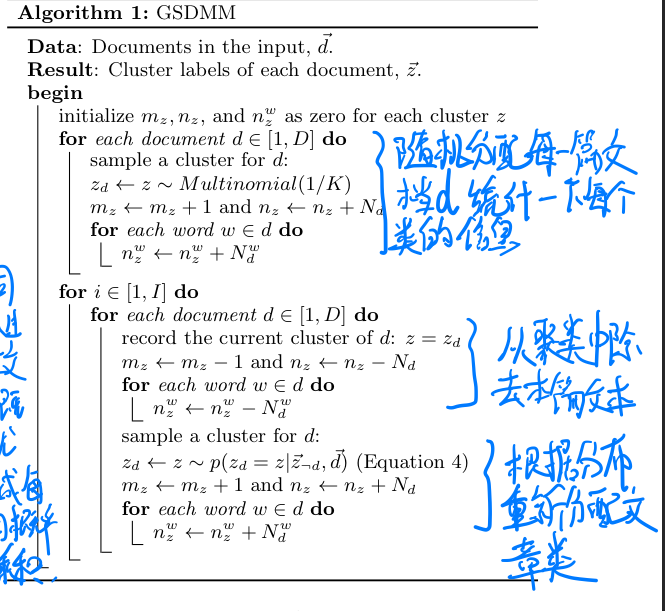

2. Gibbs Sampling for DMM

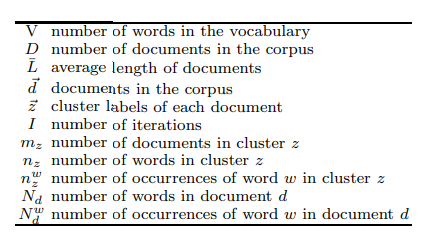

Notations:

More formally, the mathematical expression for the probability distribution of the document $d$ in cluster $z$ is:

where $\vec{z}_{\neg d}$ is means the cluster label of document d is removed from $\vec{z}$ and $K$ is the initial number of clusters. $m_{z,\neg d}$ is the number of documents in table $z$ without considering document $d$. $\alpha$ and $\beta$ is the hyperparameters of the algorithm.

This first part of this expression is corresponding to rule 1 and the second part is corresponding to rule 2.

Meaning of $\alpha$ and $\beta$

$\alpha$ controls the probability that a student will join a table that is currently empty When alpha is 0, no one will join an empty table.

$\beta$ controls the student’s affinity for other students with similar interests. A low beta means that students desire to sit with students of similar interests. A high beta means they are less concerned with affinity and are more influenced by the popularity of a table

Label Word Generating for Clusters

- the probability of word $w$ being generated by cluster $z$ is: